We were recently tasked with improving the delivery performance of a lazy-loaded, digital rights managed (DRM) ebook platform. Long story short, there are things we can cache and things we can’t, because the rights management has to stay intact.

First things first: we had to get a handle on exactly what was travelling to and from the ebook platform, and where we could make a difference.

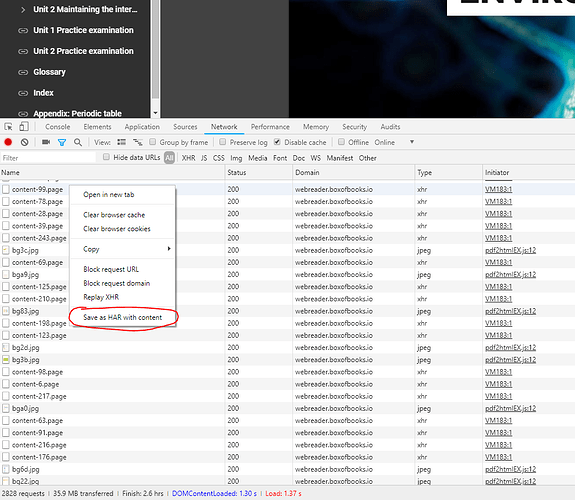

Using dev tools in Chrome, you can export the network traffic as a HAR file:

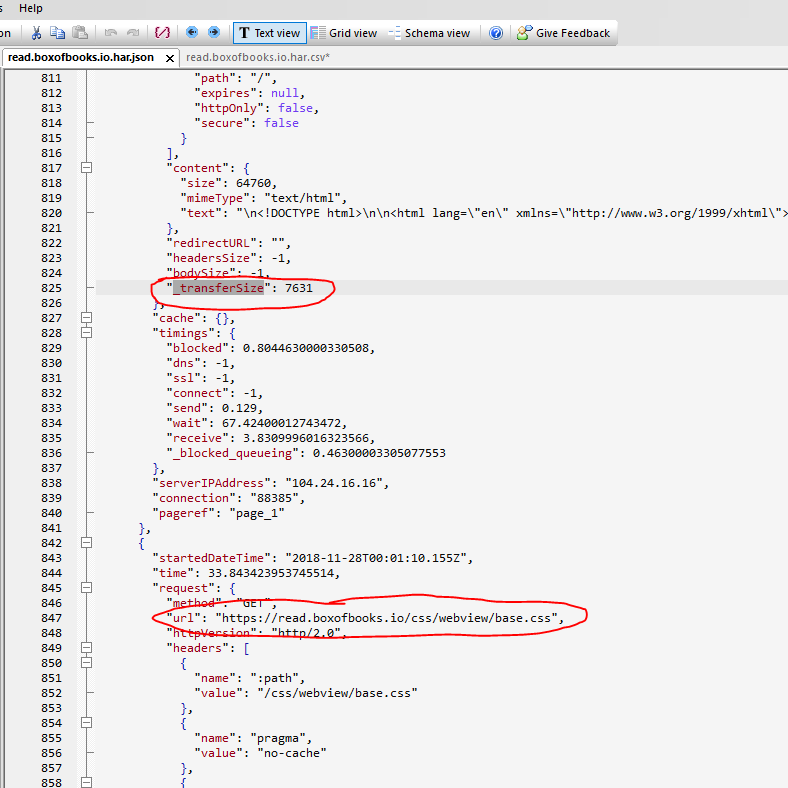

That generated a 68MB JSON file, which we then imported into a client that can convert JSON to CSV:

We like the look of the JSON Buddy converter.

(Note: the UI is a bit tedious. I couldn’t delete multiple properties from the column list at once, so I had to do them one by one… and there were about 100 properties… and you had to click the column name, then the “Del” button, for each one. Ugh!)

Configure the CSV export with the JSON path of the properties that you want to extract; for example, url and transfer size:
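If clicking through a converter UI gets old, the same extraction can be scripted. Here is a minimal sketch in Python, assuming the HAR follows the usual `log.entries[]` layout and that Chrome has written its `_transferSize` extension field on each response. The function names `har_to_rows` and `write_csv` are made up for this example.

```python
import csv


def har_to_rows(har: dict) -> list[tuple[str, int]]:
    """Return (url, transfer_size) pairs for every entry in a parsed HAR."""
    rows = []
    for entry in har["log"]["entries"]:
        url = entry["request"]["url"]
        response = entry["response"]
        # Assumption: _transferSize is a Chrome-specific extension field.
        # Fall back to the standard bodySize if it is missing.
        size = response.get("_transferSize")
        if size is None:
            size = response.get("bodySize", 0)
        # bodySize is -1 when the size is unknown, so clamp to zero.
        rows.append((url, max(size, 0)))
    return rows


def write_csv(rows, path: str) -> None:
    """Write the rows to a CSV file with a header line."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["url", "transfer_size"])
        writer.writerows(rows)
```

To use it, load the exported file with `json.load` and pass the result to `har_to_rows`, then hand the rows to `write_csv` with whatever output file name you like.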

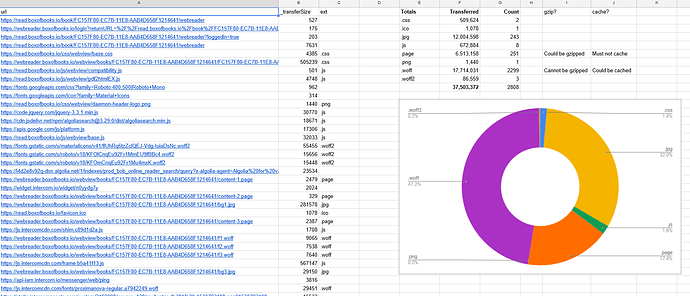

Then import the lot into Google Sheets, add a file extension column and a small table that does conditional totals and counts, then graph it:

Here we’re showing how the 37MB of total data transferred breaks down by file extension.
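The file extension column and the conditional totals and counts can also be worked out in a few lines of Python rather than in a spreadsheet. This is only a sketch: it assumes you already have `(url, size)` pairs, and the names `file_extension` and `totals_by_extension` are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def file_extension(url: str) -> str:
    """Return the lower-cased extension of the URL path, or "(none)"."""
    # urlsplit drops the query string, so "a.woff?v=2" still yields ".woff".
    suffix = PurePosixPath(urlsplit(url).path).suffix
    return suffix.lower() or "(none)"


def totals_by_extension(rows):
    """Map extension -> (request count, total bytes), largest total first."""
    stats = defaultdict(lambda: [0, 0])
    for url, size in rows:
        ext = file_extension(url)
        stats[ext][0] += 1      # count of requests
        stats[ext][1] += size   # bytes transferred
    ordered = sorted(stats.items(), key=lambda item: item[1][1], reverse=True)
    return {ext: (count, total) for ext, (count, total) in ordered}
```

Sorting by total bytes puts the heaviest file types at the top, which is the same thing the graph makes obvious at a glance.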

Amazing! We can save about 8MB by gzipping .page files, and for future page loads we can cache .woff files on the client so they don’t need to be downloaded again.
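To double-check which responses are actually missing compression or caching headers, the same HAR can be inspected directly. The sketch below is one way to do it, assuming each entry carries the standard HAR `response.headers` list of name/value pairs. `response_header` and `audit_headers` are hypothetical helper names, not part of any library.

```python
from urllib.parse import urlsplit


def response_header(entry: dict, name: str):
    """Return the value of a response header (case-insensitive), or None."""
    for header in entry["response"].get("headers", []):
        if header["name"].lower() == name.lower():
            return header["value"]
    return None


def audit_headers(har: dict, extension: str):
    """Yield (url, content_encoding, cache_control) for matching entries."""
    for entry in har["log"]["entries"]:
        url = entry["request"]["url"]
        # Compare against the URL path only, ignoring any query string.
        if urlsplit(url).path.lower().endswith(extension):
            yield (
                url,
                response_header(entry, "Content-Encoding"),
                response_header(entry, "Cache-Control"),
            )
```

A `None` in the second position for a `.page` URL means the response carried no `Content-Encoding` header, and a `None` in the third position for a `.woff` URL means there was no `Cache-Control` header to let the browser reuse it.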

Enjoy!